Nov 1, 2019

DeepZine

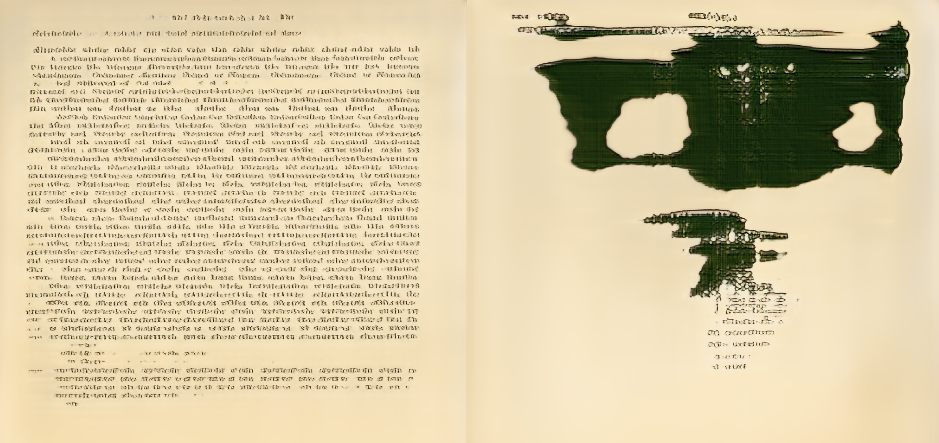

Two images of book pages that were created by a generative adversarial network (GAN).

This project is, broadly, a particular implementation of the Progressively Growing Generative Adversarial Network (PGGAN). This architecture was first developed by Karras et al. in "Progressive Growing of GANs for Improved Quality, Stability, and Variation". The code in this project was based on GitHub user Jichao Zhang's TensorFlow implementation of the PGGAN, although some significant changes have been made since.
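The core idea in Karras et al. is that new, higher-resolution layers are faded in gradually rather than switched on at once: during a transition, the network's output is a blend of the previous resolution's image (upsampled 2x) and the new layer's image, weighted by a factor alpha that ramps linearly from 0 to 1. The NumPy sketch below illustrates only that blending step. It is not this project's code, and the function names and the linear schedule are illustrative assumptions.

```python
import numpy as np


def upsample_nearest_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour 2x upsampling of an (H, W, C) image array."""
    return x.repeat(2, axis=0).repeat(2, axis=1)


def fade_in(low_res_rgb: np.ndarray, high_res_rgb: np.ndarray, alpha: float) -> np.ndarray:
    """Blend the upsampled old-resolution output with the new layer's output.

    alpha = 0 reproduces the old (upsampled) image; alpha = 1 uses only the new layer.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return (1.0 - alpha) * upsample_nearest_2x(low_res_rgb) + alpha * high_res_rgb


def alpha_schedule(step: int, fade_steps: int) -> float:
    """Hypothetical linear ramp: alpha grows from 0 to 1 over `fade_steps` steps, then stays at 1."""
    return min(1.0, step / fade_steps)


# Example: blending a 4x4 stage into an 8x8 stage a quarter of the way through the fade.
low = np.ones((4, 4, 3))
high = np.zeros((8, 8, 3))
blended = fade_in(low, high, alpha_schedule(25, 100))
```

Ramping alpha gives the freshly initialized layers time to learn before they fully determine the output. This gradual handoff is what the paper credits for much of the method's training stability.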

While the best-known applications of the PGGAN so far have generated high-resolution, realistic faces, objects, and memes, this implementation generates synthetic book pages! It does this by downloading a set number of print pages from the Internet Archive using its Python API, preprocessing them into square images of a uniform size, and then feeding them into the original PGGAN architecture. You can read documentation for this project here.
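The post doesn't spell out how the pages are made square. Here is a minimal sketch of one plausible approach, assuming grayscale pages held as NumPy arrays: pad each page with white to a square, keeping it centered, then resize it with nearest-neighbour sampling to a power-of-two side length, since the PGGAN grows its resolution by doubling. The function names and the 1024-pixel default are hypothetical and are not taken from the project's code.

```python
import numpy as np


def pad_to_square(page: np.ndarray, fill: int = 255) -> np.ndarray:
    """Pad a grayscale (H, W) page with `fill` (white by default) so it becomes square, centered."""
    h, w = page.shape
    side = max(h, w)
    out = np.full((side, side), fill, dtype=page.dtype)
    top = (side - h) // 2
    left = (side - w) // 2
    out[top:top + h, left:left + w] = page
    return out


def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize of a square (S, S) image to (size, size)."""
    s = img.shape[0]
    idx = (np.arange(size) * s) // size  # source row/column sampled for each output pixel
    return img[np.ix_(idx, idx)]


def preprocess_page(page: np.ndarray, size: int = 1024) -> np.ndarray:
    """Hypothetical preprocessing step: square the page, then bring it to the training resolution."""
    return resize_nearest(pad_to_square(page), size)


# Example: a tall, all-black 300x200 "page" becomes a 64x64 square with white side margins.
page = np.zeros((300, 200), dtype=np.uint8)
square = preprocess_page(page, size=64)
```

Padding preserves the page's aspect ratio, so text isn't stretched, at the cost of blank margins. Cropping would avoid the margins but could cut off headers or page numbers.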

This project was developed as a sort of toy dataset for other work on synthesizing high-resolution medical images using the PGGAN. One of the things I noticed while training medical image PGGANs was that some repetitive overlaid clinical annotations were reproduced letter-for-letter in the synthesized images. I wanted to see what the PGGAN would do on a dataset made up purely of text. I downloaded archived scientific reports from the Woods Hole Oceanographic Institution and found the results fascinating. Instead of English letters, there were dreamlike pseudo-letters, arranged in fake paragraphs, in fake columns, with fake headers and fake page numbers. Cover pages, tables, and figures swirled together into abstract ink-blot pages when there was no text to generate. It was mesmerizing, and I think worth sharing :).

You can see a project report for this portfolio item here: Project Report: Generating Fake Book Pages Using a GAN.